Analysing Mainstream Media Headlines for Electric Vehicles


Last Updated: Wednesday, August 28, 2024 10:39 AM - Data up to Sunday, 28 January, 2024

Abstract

News media play an indispensable role in disseminating information and shaping public opinion, whether during times of crisis or through technological transitions, and headlines are often the first point of contact for readers. However, research has shown that many people form their opinions based on the headline alone, without reading the full article (Kwak et al., 2010 - Breaking the News: First Impressions Matter on Online News). This phenomenon is often referred to as 'headline-reading', where individuals react to the attention-grabbing title rather than taking the time to read the entire piece. As a result, headlines significantly influence how people think about and respond to various topics.

In the context of electric vehicles, this is particularly concerning. Headlines that emphasise negative aspects, such as range anxiety or high costs, may lead readers to form a biased view of EVs without considering the benefits they offer. Conversely, headlines that highlight the environmental advantages of EVs may inspire readers to adopt more sustainable transportation options.

Headlines have a ripple effect, shaping not only individual opinions but also policy decisions and business strategies. For instance, news coverage that focuses on the limitations of EV charging infrastructure may lead policymakers to prioritize investment in traditional fossil fuel-based energy sources, rather than exploring alternative solutions. Similarly, headlines that tout the benefits of electric vehicles may inspire businesses to invest in EV-related technologies, driving innovation and job creation.

A study by Dr. Robert Cialdini (1993) found that using emotional headlines can significantly impact public perception. He demonstrated that emotionally charged headlines can increase people's willingness to take action.

The most effective appeals are those that tap into the deep-seated emotions of the audience.

—Cialdini, R. B. (1993)

Influence: The Psychology of Persuasion. Morrow.

This study integrates three key analytical approaches: sentiment analysis, sentiment bias evaluation, and time-series analysis. I drew upon a massive dataset of 1.9 million headlines, collected over six months from top UK news sources, to inform the analysis and shed light on the electric vehicle reporting landscape.

Our analysis reveals a staggering finding: an alarming 50% of electric vehicle headlines are negative, highlighting the need for balanced reporting and greater public understanding. The study finds that certain notable publishers make extensive use of unsubstantiated claims and concerns to promote this negative rhetoric. This is further exacerbated by the volume of new anti-EV headlines increasing significantly during periods of peak sales for new vehicles, when readers may be considering the purchase of a new vehicle.

In isolation, a news media outlet promoting a negative story about electric vehicles would not be noteworthy. Our analysis, however, exposes a broader and disheartening pattern: despite the urgent need for accurate information, many news outlets are perpetuating myths and misconceptions about electric vehicles, leaving consumers in the dark.

A study by Dr. Philip E. Tetlock (1985) explored how connotative headlines can influence public perception. He found that using connotative language in headlines can shape people's attitudes and beliefs.

The power of words is not just a metaphor; it's a reality. The way we frame an issue can shape the way people think about it.

—Dr. Philip E. Tetlock


This report classifies headline sentiment using Natural Language Processing, Large Language Models (including the commercial ChatGPT LLM) and hand-tuned sentiment classification, to develop a comprehensive analysis of the UK media landscape and how electric vehicles are portrayed in its headlines.

As we embark on this journey of discovery, we can't help but ask: what does it take to create a more informed and environmentally conscious society? This study offers some answers.


Project Introduction

The goal of this research is to investigate how the media portrays electric vehicles and what kinds of messages they convey to readers. Specifically, I aim to analyse the themes, sentiment biases, and patterns in electric vehicle-related headlines across different publications.

Research Questions

How does the media portray electric vehicles, and what kinds of messages does it convey to readers?

What are the common themes of headlines found in the data across different publications, and what is the sentiment bias (negative, positive, or neutral) towards electric vehicles in these headlines?

- What are the most common themes or topics covered in EV-related headlines across different publications?

- Are there any notable differences in the tone or sentiment of EV headlines between mainstream newspapers and online news sources?

- Do certain types of publications (e.g. national vs local, liberal vs conservative) tend to have more positive or negative sentiments towards electric vehicles?

- Is there a correlation between the popularity or reach of a publication and its sentiment bias towards electric vehicles?

- Are there any specific events or developments that appear to influence the tone or content of EV-related headlines?

How do electric vehicle-related headlines persist in online search results compared to general news headlines, and what does this persistence reveal about the media's attention span and public interest in EVs?

- How much attention does electric vehicle-related news receive online?

- Is there a bias towards promoting or sensationalizing EV news?

- What factors contribute to the longevity of an EV headline's presence in search results?

What patterns or correlations exist between the volume and timing of electric vehicle-related headlines, and how do these patterns relate to market trends or consumer behavior?

- Is there a relationship between the frequency of EV-related headlines and market trends or consumer behavior?

- Are the headlines themselves influencing public perception or purchasing decisions?

- What insights can be gained by analysing the timing and volume of EV headlines alongside market data?

What is the sentiment bias (positive, negative, or neutral) towards electric vehicles in mainstream media headlines, and what do these biases reveal about public perception, industry trends, or societal attitudes towards EVs?

- Categorising headlines as positive, negative, or neutral based on their content.

- Analysing the frequency and distribution of these biases across different media sources, topics, or time periods.

- Investigating whether there are any correlations between the sentiment bias and market trends, consumer behavior, or industry developments.

Is there a correlation between the sentiment analysis of electric vehicle-related headlines in mainstream media outlets and the independent ratings provided by Media Bias Fact Check?

- Is there a significant correlation between the sentiment bias detected in EV headlines and the overall rating provided by Media Bias Fact Check?

- Are there any notable exceptions or inconsistencies between the two metrics?

- Do certain publications tend to have a consistent sentiment bias across different topics, including electric vehicles?


Objective

The world is on the cusp of a significant transformation in transportation, with electric vehicles (EVs) poised to revolutionize the way we travel. Governments and industries around the world are setting ambitious targets to reduce greenhouse gas emissions and combat climate change, making EVs a crucial part of the response.

However, the transition to electric vehicles is not without its challenges. Media outlets shape public opinion and influence consumer behavior, so they play a vital role in the process. As such, it's essential to examine how news outlets are reporting on electric vehicles, particularly with regard to their potential benefits and drawbacks.

I aim to investigate the mainstream media's coverage of electric vehicles, exploring common themes, biases, and messaging strategies employed by various news sources, to better understand their impact. By analysing these trends, I aim to contribute to a deeper understanding of the role that mass media can play in facilitating or hindering the widespread adoption of electric vehicles.

I examine the sentiment and trends of electric vehicle (EV) headlines in mainstream media, using a massive dataset of over 1.9 million web-scraped headlines. A variety of methods were used to assign sentiment labels to each headline, providing a gold standard for evaluating the performance of automated sentiment analysis tools. The results reveal significant biases and patterns in the coverage of EVs, including the dominance of negative sentiments and the amplification of misinformation by media outlets.

Corresponding with the observed trends in media coverage, an analysis of the correlation between the volume of negative headlines and new vehicle sales suggests a potential impact on public opinion and purchasing decisions (see Headline Timing Analysis for more information). The study highlights the limitations of current sentiment analysis tools for this domain and proposes alternative approaches using topic modelling and entity recognition techniques to better understand the complex context and nuances of EV-related discourse.

This research contributes to the ongoing discussion about the role of media in shaping public perception of emerging technologies, while also providing insights for stakeholders in the electric vehicle industry on how to address misinformation and promote a more balanced narrative.


Methodology

Scope of analysis

As part of my research, I designed and implemented a web scraping script that systematically collected headlines from the search results of UK mainstream media news outlets' websites. This automated process was executed every five minutes over a six-month period, spanning from August 2, 2023 to January 28, 2024.

Web scraping is a way for computers to extract information from websites without actually browsing them like we do. Imagine you're looking through a big library and you need to find all the books with a specific title or author. Instead of searching through each shelf one by one, you could ask a friend to help you by making a list of all the relevant book titles for you. That's basically what web scraping does! It uses special software that can "read" websites like a computer program would read text, and then extracts specific information from those sites - like headlines, prices, or even whole articles.
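To make the idea concrete, here is a minimal sketch of the extraction step, using only the Python standard library. It is not the script used in this study: the `<h3>` headline tag, the field names and the sample HTML are all assumptions for illustration, and a real scraper would fetch each outlet's search page on a schedule and use selectors specific to that site.

```python
from datetime import datetime, timezone
from html.parser import HTMLParser


class HeadlineParser(HTMLParser):
    """Collect the text inside <h3> tags (an assumed headline tag)."""

    def __init__(self):
        super().__init__()
        self._in_headline = False
        self._buffer = []
        self.headlines = []

    def handle_starttag(self, tag, attrs):
        if tag == "h3":
            self._in_headline = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_headline:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "h3" and self._in_headline:
            # Join the text fragments and collapse runs of whitespace.
            text = " ".join("".join(self._buffer).split())
            if text:
                self.headlines.append(text)
            self._in_headline = False


def extract_observations(html, publication, collected_at=None):
    """Turn one page of HTML into timestamped headline records."""
    parser = HeadlineParser()
    parser.feed(html)
    parser.close()
    stamp = (collected_at or datetime.now(timezone.utc)).isoformat()
    return [
        {"collected_at": stamp, "publication": publication, "headline": h}
        for h in parser.headlines
    ]


# Hypothetical page fragment standing in for a real search-results page.
SAMPLE_HTML = """
<ul>
  <li><h3>Electric car sales <em>rise</em> again</h3></li>
  <li><h3>  New EV charger rollout delayed </h3></li>
  <li><p>Not a headline</p></li>
</ul>
"""

observations = extract_observations(SAMPLE_HTML, "Example Publication")
for row in observations:
    print(row["publication"], "|", row["headline"])
```

Each record carries a collection timestamp and the publication name, which mirrors the kind of observation described in this report (date, time, headline and source).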

Our research offers a crucial window into media outlet reporting for policymakers, business leaders, and the general public – shedding light on the critical role that media portrayals play in shaping attitudes towards electric vehicles.

Observations collected for each publication

| Publication | August | September | October | November | December | January |
|---|---|---|---|---|---|---|
| BBC | 6,386 | 10,066 | 10,416 | 10,066 | 10,356 | 9,138 |
| Express | 4,670 | 7,200 | 7,450 | 1,550 | 0 | 0 |
| The Daily Mail | 115,460 | 173,243 | 174,171 | 162,836 | 139,897 | 119,457 |
| The Daily Star | 18,056 | 27,862 | 28,807 | 27,761 | 28,532 | 25,218 |
| The Economist | 4,848 | 7,200 | 7,450 | 7,190 | 7,370 | 6,520 |
| The Independent | 45,970 | 73,236 | 74,388 | 62,622 | 80,875 | 72,372 |
| The Spectator | 18,660 | 28,760 | 29,780 | 28,760 | 29,640 | 26,080 |
| The Sun | 5,604 | 8,730 | 8,904 | 8,740 | 8,892 | 7,812 |
| The Telegraph | 18,174 | 28,002 | 29,016 | 28,041 | 28,860 | 25,424 |
| The Times | 4,670 | 7,200 | 7,440 | 7,190 | 7,390 | 6,530 |


Observations

Each data point includes the date, time, headline (and edits to the headlines), and the publication source, providing a robust basis for analysis. In total, 1.9 million observations were recorded during the six-month period from August to January.
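As a quick cross-check of that total, the monthly counts from the table of observations per publication can be summed directly. This is a small sketch; the variable names are my own, and the numbers are copied from the table.

```python
MONTHS = ["August", "September", "October", "November", "December", "January"]

# Monthly observation counts per publication, copied from the table above.
OBSERVATIONS = {
    "BBC": [6386, 10066, 10416, 10066, 10356, 9138],
    "Express": [4670, 7200, 7450, 1550, 0, 0],
    "The Daily Mail": [115460, 173243, 174171, 162836, 139897, 119457],
    "The Daily Star": [18056, 27862, 28807, 27761, 28532, 25218],
    "The Economist": [4848, 7200, 7450, 7190, 7370, 6520],
    "The Independent": [45970, 73236, 74388, 62622, 80875, 72372],
    "The Spectator": [18660, 28760, 29780, 28760, 29640, 26080],
    "The Sun": [5604, 8730, 8904, 8740, 8892, 7812],
    "The Telegraph": [18174, 28002, 29016, 28041, 28860, 25424],
    "The Times": [4670, 7200, 7440, 7190, 7390, 6530],
}

totals_by_publication = {name: sum(counts) for name, counts in OBSERVATIONS.items()}
totals_by_month = {
    month: sum(counts[i] for counts in OBSERVATIONS.values())
    for i, month in enumerate(MONTHS)
}
grand_total = sum(totals_by_publication.values())

print(f"Total observations: {grand_total:,}")
for name, total in sorted(totals_by_publication.items(), key=lambda kv: -kv[1]):
    print(f"{name:<16} {total:>9,} ({total / grand_total:.1%})")
```

The counts sum to 1,976,938, consistent with the 1.9 million figure quoted here. The breakdown also shows how unevenly the observations are spread across publications, which is worth keeping in mind when comparing outlets.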

Search results often included topics unrelated to electric vehicles. These were retained to allow a comparison between general news headlines and EV headlines.

EDA

Extensive exploratory data analysis (EDA) and data cleansing were carried out to produce a clean source dataset to work with.

Feature Engineering

Feature engineering was used to create additional columns and supplemental datasets.

See the Meta Data Overview and Feature Engineering section for more information on Meta Data, EDA, Cleansing and Feature Engineering.


Sentiment Analysis

NLP Sentiment Analysis

I used Natural Language Processing (NLP) techniques, specifically the TextBlob library, to classify the sentiment of each headline as positive, negative, or neutral.

The results from TextBlob were of poor quality and, in my opinion, unusable for analysis.

Further investigation of the discrepancy led me to these conclusions:

- TextBlob uses simple rule-based analysis and is unable to process linguistic cues such as sarcasm, irony, or figurative language, which are common in mainstream media headlines.

- Headlines often contain context-specific information, such as references to specific events, people, or trends. TextBlob might not be able to fully understand the context, which can lead to misclassifications.

- Some headlines may contain ambiguous language that can be interpreted as either positive or negative depending on the reader's perspective. TextBlob might struggle with these ambiguities.

- Electric vehicles (EVs) are a relatively new and rapidly evolving field. TextBlob may not have enough domain-specific knowledge to understand the concerns, benefits, or controversies surrounding EVs.
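The failure modes listed above can be illustrated with a toy word-list classifier. This is a deliberately simplified sketch and not TextBlob's actual lexicon or algorithm: the `LEXICON` scores, the `threshold` value and the example headlines are all invented for illustration.

```python
import re

# Tiny illustrative word list; NOT TextBlob's real lexicon.
LEXICON = {
    "great": 0.8,
    "boost": 0.5,
    "cheaper": 0.4,
    "fears": -0.6,
    "crisis": -0.8,
    "slump": -0.6,
}


def polarity(headline):
    """Average the scores of known words; 0.0 when no word is recognised."""
    words = re.findall(r"[a-z']+", headline.lower())
    scores = [LEXICON[w] for w in words if w in LEXICON]
    return sum(scores) / len(scores) if scores else 0.0


def label(score, threshold=0.1):
    """Map a polarity score to one of three sentiment labels."""
    if score > threshold:
        return "positive"
    if score < -threshold:
        return "negative"
    return "neutral"


examples = [
    "EV sales boost as prices fall",               # plain wording
    "Great, another EV charger that doesn't work",  # sarcasm
    "Drivers face long queues at chargers",         # no scored words
]
for headline in examples:
    print(f"{label(polarity(headline)):<8} | {headline}")
```

The sarcastic headline is scored as positive because of the word "great", and the queueing headline comes out neutral because none of its words are in the word list. Both would be rated negative by a human reader.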

LLM Analysis

I used the Meta Llama 3.1 Large Language Model to perform a more nuanced sentiment and contextual analysis, identifying subtle biases and underlying tones in the headlines that might not be captured by traditional sentiment analysis tools.

This produced results much closer to the hand-tuned ratings, with the LLM identifying a similar proportion of negative headlines. However, the LLM identified fewer of the positive headlines, more often classifying them as neutral. The results were a significant improvement over TextBlob and, with further training, could become quite usable.
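One plausible way to wire an LLM into this kind of classification is sketched below. The prompt wording, the `generate` callable and the fallback to "neutral" are all assumptions for illustration. They are not the prompt or client code used in this study, and `fake_generate` stands in for a real model call.

```python
import re

LABELS = ("negative", "neutral", "positive")

# Hypothetical prompt; the wording used in the study is not documented here.
PROMPT_TEMPLATE = (
    "Classify the sentiment of this news headline towards electric vehicles.\n"
    "Answer with exactly one word: negative, neutral or positive.\n"
    "Headline: {headline}\n"
    "Sentiment:"
)


def build_prompt(headline):
    return PROMPT_TEMPLATE.format(headline=headline.strip())


def parse_label(reply, default="neutral"):
    """Return the first recognised label word in the model's reply."""
    for word in re.findall(r"[a-z]+", reply.lower()):
        if word in LABELS:
            return word
    return default  # unparseable replies fall back to the default label


def classify(headline, generate):
    """`generate` is any callable mapping a prompt string to a reply string."""
    return parse_label(generate(build_prompt(headline)))


def fake_generate(prompt):
    # Stand-in for a real LLM call, so the sketch runs offline.
    return " Negative.\n"


print(classify("EV owners warned over charging costs", fake_generate))
```

One design caveat: defaulting unparseable replies to "neutral" would inflate the neutral share, so in practice it may be better to log and review those replies separately.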

Hand-Tuned Analysis

I resorted to a manual annotation review, using human judgement (my own) to validate the accuracy of the automated methods and to fine-tune the sentiment classification.

This has the potential to introduce personal bias. To reduce it, I hid the publication source, date and time during the review. I am obviously biased; however, in my opinion the resulting dataset aligned better with the sentiment of the headlines.

For further information on the bias risk, see Sentiment Analysis - Bias Risk.
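The blinding step described above could be implemented along these lines. This is a sketch under assumed field names (`publication`, `collected_at`, `headline`), not the actual review tooling: it shuffles the records, exposes only the headline text to the reviewer, and keeps a separate key for re-joining the labels afterwards.

```python
import random


def blind_for_review(records, seed=0):
    """Return (review_sheet, key): shuffled headline-only rows plus a lookup."""
    rng = random.Random(seed)
    order = list(range(len(records)))
    rng.shuffle(order)  # remove any ordering by source or date

    review_sheet = []
    key = {}
    for review_id, index in enumerate(order):
        # The reviewer sees only an opaque id and the headline text.
        review_sheet.append(
            {"review_id": review_id, "headline": records[index]["headline"]}
        )
        key[review_id] = records[index]
    return review_sheet, key


def merge_labels(labels, key):
    """Re-attach the hidden metadata once labelling is complete."""
    return [dict(key[rid], sentiment=lab) for rid, lab in sorted(labels.items())]


# Hypothetical records for illustration.
records = [
    {"publication": "Paper A", "collected_at": "2023-09-01T10:00", "headline": "EV fire fears grow"},
    {"publication": "Paper B", "collected_at": "2023-09-02T11:00", "headline": "Electric car prices fall"},
    {"publication": "Paper C", "collected_at": "2023-09-03T12:00", "headline": "New chargers open in Leeds"},
]

sheet, key = blind_for_review(records, seed=1)
labels = {
    row["review_id"]: ("negative" if "fears" in row["headline"] else "positive")
    for row in sheet
}
labelled = merge_labels(labels, key)
```

Blinding of this kind limits bias from knowing the source, but it cannot remove the reviewer's own views on the subject matter.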


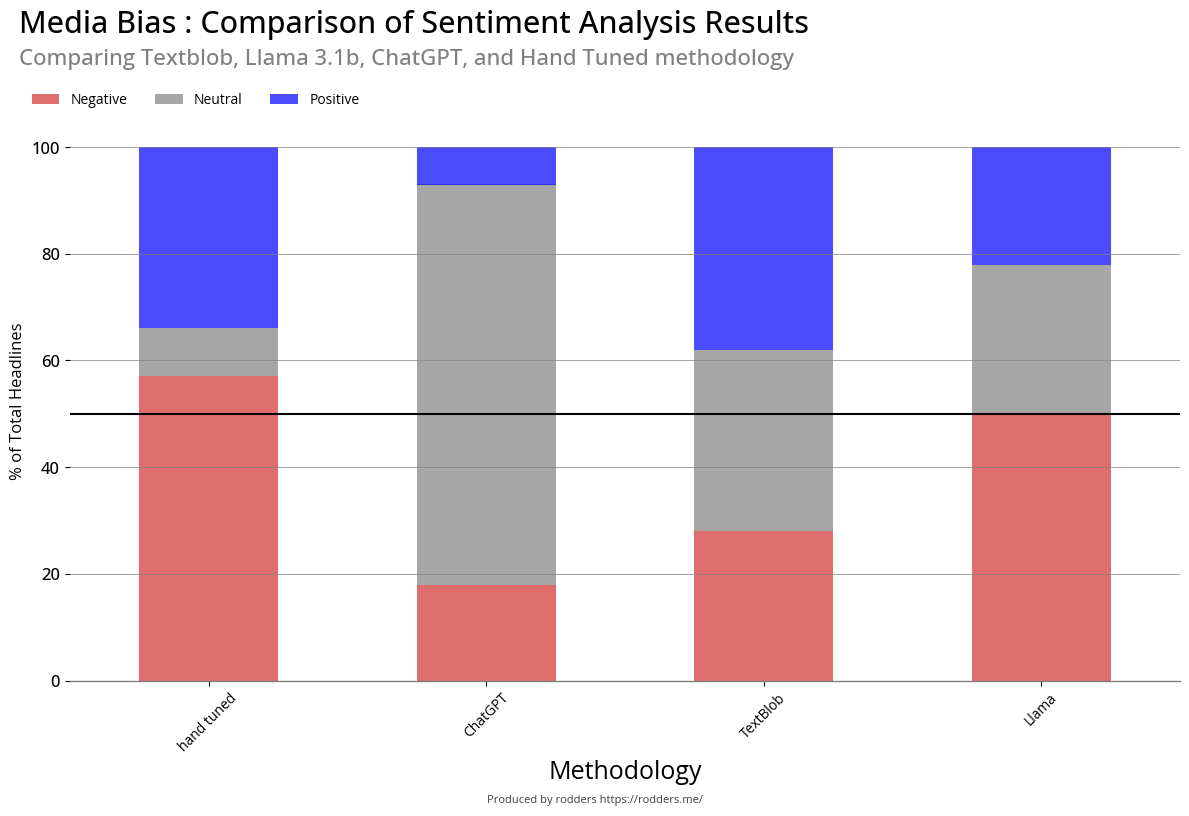

Results of Sentiment Analysis

Unique headlines were used as the source. Before processing, these were filtered to headlines containing the EV terms "Charger", "Charging", "EV", "Electric Vehicle" or "Electric Car".
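A filter of this kind can be sketched with a regular expression. The exact matching rules used in the study are not stated, so the whole-word matching, case-insensitivity and optional plural "s" below are assumptions of mine.

```python
import re

EV_TERMS = ["Charger", "Charging", "EV", "Electric Vehicle", "Electric Car"]

# Assumed rules: whole-word, case-insensitive, optional trailing "s".
EV_PATTERN = re.compile(
    r"\b(?:" + "|".join(re.escape(term) for term in EV_TERMS) + r")s?\b",
    re.IGNORECASE,
)


def unique_ev_headlines(headlines):
    """Keep the first occurrence of each headline that mentions an EV term."""
    seen = set()
    kept = []
    for headline in headlines:
        if headline in seen:
            continue
        seen.add(headline)
        if EV_PATTERN.search(headline):
            kept.append(headline)
    return kept


sample = [
    "New EV chargers for Leeds",
    "Seven things every driver should know",  # contains "ev" inside words only
    "Electric cars get cheaper",
    "New EV chargers for Leeds",              # duplicate
    "Council to prevent parking chaos",
]
print(unique_ev_headlines(sample))
```

The word boundaries stop "EV" from matching inside words such as "seven" or "prevent". Terms like "Charging" can still match unrelated stories (for example, police charging a suspect), which is one reason a manual review of the filtered set is useful.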

| Method | Negative | Neutral | Positive |
|---|---|---|---|
| Hand-tuned | 57% | 9% | 34% |
| Llama | 51% | 28% | 21% |
| ChatGPT | 18% | 75% | 7% |
| TextBlob | 28% | 34% | 38% |

In using the "human judgement" approach, I've taken a closer look at each individual headline and provided a subjective rating (positive, neutral, or negative) based on my understanding and interpretation. This is a labour-intensive process that requires human expertise, attention to detail, and contextual understanding.

By doing so, I've effectively created a high-quality, manually curated dataset that can be used as a gold standard for evaluating the performance of other sentiment analysis models, including Llama 3.1 and TextBlob. This approach allowed me to assess the accuracy and reliability of these automated tools and identify areas where they may not be effective.
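Evaluating a model against a gold standard usually means comparing labels headline by headline. The sketch below computes overall accuracy and per-label recall; the function name and the five example labels are invented for illustration and are not results from this study.

```python
from collections import Counter

LABELS = ("negative", "neutral", "positive")


def evaluate(gold, predicted):
    """Compare predicted labels with gold labels, headline by headline."""
    if len(gold) != len(predicted) or not gold:
        raise ValueError("gold and predicted must be non-empty and equal length")

    confusion = Counter(zip(gold, predicted))  # (gold, predicted) -> count
    accuracy = sum(confusion[(lab, lab)] for lab in LABELS) / len(gold)

    recall = {}
    for lab in LABELS:
        total = sum(1 for g in gold if g == lab)
        # Recall: share of gold-label headlines the model also gave that label.
        recall[lab] = confusion[(lab, lab)] / total if total else None
    return accuracy, recall, confusion


# Invented example labels, not data from the study.
gold = ["negative", "negative", "positive", "neutral", "positive"]
predicted = ["negative", "negative", "neutral", "neutral", "positive"]

accuracy, recall, confusion = evaluate(gold, predicted)
print(f"accuracy={accuracy:.2f}", recall)
```

In this toy example the model misses one of the two positive headlines by calling it neutral, the same kind of error described for the LLM in this report.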

In contrast to automatic sentiment analysis methods, manual annotation provides a more nuanced and accurate understanding of the sentiment behind each headline. However, it's important to note that this process can be time-consuming and resource-intensive, especially for large datasets.

The hand-tuned sentiment analysis shows a much more realistic distribution of sentiments, with 57% negative, 9% neutral, and 34% positive. This is likely a more accurate representation of the actual sentiments in the dataset.

Llama 3.1 was the closest to the hand-tuned analysis.

In contrast, ChatGPT and TextBlob are both significantly off the mark. ChatGPT classifies 75% of headlines as neutral, with only 18% negative and 7% positive, so it identifies far fewer negative headlines than the hand-tuned review. TextBlob is also off, but in a different way, with 28% negative and 38% positive.
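One simple way to quantify "closer" is the total variation distance between each tool's label distribution and the hand-tuned one: half the sum of the absolute differences, here in percentage points. The choice of this metric is mine, not part of the original analysis, and it compares overall distributions only, not agreement on individual headlines.

```python
# Label shares in percent, taken from the results table.
DISTRIBUTIONS = {
    "hand tuned": {"negative": 57, "neutral": 9, "positive": 34},
    "Llama": {"negative": 51, "neutral": 28, "positive": 21},
    "ChatGPT": {"negative": 18, "neutral": 75, "positive": 7},
    "TextBlob": {"negative": 28, "neutral": 34, "positive": 38},
}


def total_variation(p, q):
    """Half the sum of absolute differences between two distributions."""
    return sum(abs(p[label] - q[label]) for label in p) / 2


reference = DISTRIBUTIONS["hand tuned"]
distances = {
    name: total_variation(reference, dist)
    for name, dist in DISTRIBUTIONS.items()
    if name != "hand tuned"
}

for name, distance in sorted(distances.items(), key=lambda kv: kv[1]):
    print(f"{name:<9} {distance:.1f} percentage points")
```

By this measure Llama differs from the hand-tuned distribution by 19 percentage points, TextBlob by 29 and ChatGPT by 66, which matches the ordering described in the text.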

This highlights the limitations of automated sentiment analysis tools and the importance of human oversight and validation. Even when used as a starting point or to augment other approaches, these models may not provide accurate results without careful evaluation and fine-tuning.
